Once a web request has traveled through the Internet and reached the server, it can be processed right away, right? Not so fast! In a production setup, the request does not reach the application directly. In this article, we introduce the different services that support a web application: Content Delivery Networks, Load Balancers, Reverse Proxies, Web Servers, and Application Servers.

Why do we need all of this?

Technically, it is possible to connect an application directly to the Internet and start serving requests. While this may suffice for a small Internet of Things (IoT) home service, it becomes problematic in real-world production use.

There are several reasons why we need additional components before a web request reaches the application. Some of these are:

- Concurrency – How many requests can the application process at the same time? If the application cannot keep up with the traffic, users will see an error or a timeout page in the browser.

- Scalability – Can the application handle a surge of traffic? Having a way to automatically increase or decrease the capacity of the application to handle requests is critical in a production system.

- Cost – Cloud providers usually charge for outgoing (egress) bandwidth from the application, and caching can reduce this cost, especially for global traffic. Demand is also often time-based, peaking at certain hours of the day, so reducing the number of servers during low-traffic hours can save money.

- Availability – How resilient is the application when one of its servers goes down? Does a power outage at the server's location greatly disrupt operations, or are there fail-over mechanisms to handle this scenario gracefully?
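The scalability and cost points both come down to capacity arithmetic. The sketch below assumes each server handles a fixed, hypothetical number of requests per second (`capacity_per_server`), which real systems only approximate. It estimates how many servers are needed at a given load, and compares the server-hours used when scaling every hour against a fleet permanently sized for the peak. The traffic numbers are made up for illustration.

```python
import math

def servers_needed(requests_per_second: float, capacity_per_server: float,
                   min_servers: int = 1) -> int:
    """Smallest fleet that can absorb the load, never below min_servers."""
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    return max(min_servers, math.ceil(requests_per_second / capacity_per_server))

def server_hours(hourly_rps: list[float], capacity_per_server: float,
                 min_servers: int = 1) -> tuple[int, int]:
    """Return (server-hours when resized every hour, server-hours with a fleet sized for the peak)."""
    per_hour = [servers_needed(rps, capacity_per_server, min_servers) for rps in hourly_rps]
    autoscaled = sum(per_hour)
    fixed = max(per_hour) * len(hourly_rps)
    return autoscaled, fixed

# Hypothetical day of 24 hourly samples: quiet at night, busy during the day.
traffic = [50] * 8 + [900] * 10 + [300] * 6
print(servers_needed(950, 100))    # 10
print(server_hours(traffic, 100))  # (116, 216)
```

With these made-up numbers, resizing hour by hour uses a little over half the server-hours of a fleet that is always sized for the peak.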

These concerns can be addressed by the different services and components shown in the next sections.

Content Delivery Network

The time it takes for a request to travel to a server (and back) depends on where the request originated relative to the server’s location.

If the client sending the request (such as a browser) is geographically close to the server, communication between the two is generally faster. However, servers are usually located close to the company or service provider. For instance, if you access the website of a US company from Asia, your request travels across the Pacific Ocean through submarine cables. For applications that require low latency, this can be a problem.
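Distance puts a hard floor under latency: light in optical fiber travels at roughly 200,000 km/s, about two-thirds of its speed in a vacuum. The sketch below computes that lower bound for a round trip. The distances are illustrative assumptions (about 10,000 km for a trans-Pacific path, 500 km for a nearby data center), and real round trips are longer because of routing detours, queuing, and processing.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in optical fiber

def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber, ignoring routing and processing delays."""
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S

print(min_round_trip_ms(10_000))  # 100.0  (assumed trans-Pacific distance)
print(min_round_trip_ms(500))     # 5.0    (assumed nearby data center)
```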

Content Delivery Networks (CDNs) solve this by delivering assets from geographically distributed servers. Users accessing a web application can then load HTML, images, videos, and JavaScript files from the data center nearest to them.

CDNs work best for static assets or files that rarely change, because the purpose of a CDN is to cache content, not to host it.
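The caching behavior can be sketched as a toy model of a single edge location. This is a simplification, not how any particular CDN works: `fetch_from_origin` is a hypothetical stand-in for a request to your own servers, and every entry expires after a fixed time-to-live (TTL), after which the next request goes back to the origin. Since a changed file is only picked up once its cached copy expires, this also shows why CDNs suit content that rarely changes.

```python
import time
from typing import Callable

class EdgeCache:
    """Toy model of one CDN edge location with a fixed TTL per cached entry."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch_from_origin = fetch_from_origin  # hypothetical call to the origin servers
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: dict[str, tuple[bytes, float]] = {}  # url -> (body, expiry time)
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        now = self.clock()
        cached = self.entries.get(url)
        if cached is not None and cached[1] > now:
            self.hits += 1    # served from the edge; the origin is not contacted
            return cached[0]
        self.misses += 1      # missing or expired: fetch from the origin and re-cache
        body = self.fetch_from_origin(url)
        self.entries[url] = (body, now + self.ttl)
        return body

# Usage with a fake clock and a fake origin that records every call it receives.
fake_time = [0.0]
origin_calls: list[str] = []

def fake_origin(url: str) -> bytes:
    origin_calls.append(url)
    return b"contents of " + url.encode()

cache = EdgeCache(fake_origin, ttl_seconds=60, clock=lambda: fake_time[0])
cache.get("/logo.png")   # miss: fetched from the origin
cache.get("/logo.png")   # hit: still fresh
fake_time[0] = 61
cache.get("/logo.png")   # miss: the TTL has expired
print(cache.hits, cache.misses)  # 1 2
```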

Advantages of using a CDN

- Improved load times – files are served from the servers nearest to the user's location.

- Reduced bandwidth costs – since files are cached on the CDN, most requests never reach your own servers, so you pay far less for data transfer out of them. You mainly pay for egress traffic from the CDN instead.

- Increased availability – CDNs cache your content in multiple geographic locations, providing redundancy if the asset servers (or other CDN locations) experience downtime.

- Security – CDNs such as Cloudflare also provide Distributed Denial-of-Service (DDoS) protection. Because malicious traffic is absorbed by the CDN before it reaches your actual servers, the CDN can act as a “shield” against DDoS attacks.
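How much bandwidth a CDN saves depends on its byte hit ratio, the share of delivered bytes served from CDN caches without contacting your servers. A rough sketch, ignoring the CDN's own pricing; the monthly volume and hit ratio below are hypothetical.

```python
def origin_egress_gb(total_gb_served: float, byte_hit_ratio: float) -> float:
    """Data that still has to leave the origin servers when a CDN sits in front of them."""
    if not 0.0 <= byte_hit_ratio <= 1.0:
        raise ValueError("byte_hit_ratio must be between 0 and 1")
    return total_gb_served * (1.0 - byte_hit_ratio)

# Hypothetical month: 1,000 GB delivered to users, 90% of the bytes from CDN caches.
print(round(origin_egress_gb(1_000, 0.9), 2))  # 100.0
```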

Examples of commercial CDNs are Cloudflare, Amazon CloudFront, Akamai, and Fastly.

Load Balancer

Servers can only handle a limited amount of traffic, depending on their available memory and CPU and on how many resources the application needs to run. If the traffic grows beyond what a server can handle, it can become unresponsive, resulting in downtime.

A Load Balancer (LB) addresses this problem by allowing multiple servers to handle requests. Its main task is to distribute requests among a group of servers so that no single server is overloaded. If a server becomes unresponsive, the LB can mark it as “unhealthy” and divert requests to the “healthy” ones. This greatly increases the availability of your application, even under heavy traffic.
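A minimal sketch of that health-aware behavior, assuming a hypothetical pool of server names and that some external health check calls `mark_unhealthy` and `mark_healthy` (real load balancers typically run these probes themselves on a schedule). Requests rotate through the pool and skip any server currently marked unhealthy.

```python
import itertools

class LoadBalancer:
    """Rotate through servers in order, skipping any marked unhealthy."""

    def __init__(self, servers: list[str]):
        self.servers = list(servers)
        self.healthy = set(servers)
        self._ring = itertools.cycle(self.servers)

    def mark_unhealthy(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_healthy(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def pick(self) -> str:
        # Walk at most one full lap of the ring looking for a healthy server.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")

lb = LoadBalancer(["app1", "app2", "app3"])
lb.mark_unhealthy("app2")
print([lb.pick() for _ in range(4)])  # ['app1', 'app3', 'app1', 'app3']
```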

Load Balancers can use many methods to distribute traffic to servers. Common ones are Round Robin (each server receives requests in turn, in a fixed order) and Least Connections (each request goes to the server with the fewest active connections).
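Both strategies fit in a few lines of Python. This is a sketch that ignores server weights and thread safety (a real balancer updates its connection counts concurrently); the server names are hypothetical. Round Robin needs no state beyond its position, while Least Connections must be told when each request finishes.

```python
import itertools

def round_robin(servers: list[str]):
    """Yield servers in a fixed, repeating order."""
    return itertools.cycle(servers)

class LeastConnections:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers: list[str]):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server with the lowest count, so ties go to list order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a request finishes so the count reflects open connections only.
        self.active[server] -= 1

rr = round_robin(["app1", "app2"])
print([next(rr) for _ in range(3)])  # ['app1', 'app2', 'app1']

lc = LeastConnections(["app1", "app2"])
first = lc.acquire()    # app1: both idle, tie goes to the first
second = lc.acquire()   # app2
lc.release(first)       # app1's request finishes early
print(lc.acquire())     # app1: it now has 0 active connections, app2 has 1
```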

Examples of Load Balancers are HAProxy, which is open-source software, and AWS Elastic Load Balancing (ELB), which is a managed cloud service.

Reverse Proxy

A server needs to be connected to the Internet before it can serve requests to users, which means anyone who is online can reach it. Exposing your application servers directly to the public may not be a good idea, for the following reasons:

- If you need to take down a server for maintenance, this will take the entire web application down as well.

- Adding new servers is difficult because the IP address that the domain resolves to is tied to one specific server.

- Anyone can probe the server to learn its setup and configuration, potentially opening up avenues for an attack.

For these reasons, web applications are usually placed behind a Reverse Proxy. Instead of connecting the application servers directly to the Internet, you put a Reverse Proxy between your servers and the public Internet.

Advantages of using a Reverse Proxy

- Security – since the Reverse Proxy is the service directly connected to the Internet, information about the servers behind it is not exposed to the public.

- Flexibility – you can safely modify the underlying configuration of your systems and add or remove components, since the server architecture is not exposed.

- TLS Termination – to save resources on the application servers, encrypted HTTPS connections can be decrypted at the Reverse Proxy itself.
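After terminating TLS, a Reverse Proxy commonly forwards plain HTTP to the servers behind it, so it has to pass along what it learned from the original connection. A widespread convention is the de facto `X-Forwarded-For` and `X-Forwarded-Proto` headers (RFC 7239 also standardizes a `Forwarded` header). The sketch below shows that bookkeeping, plus stripping response headers that reveal backend software. It assumes header names arrive in canonical capitalization, and the list of revealing headers is an illustrative assumption, not a complete security policy.

```python
def upstream_request_headers(client_headers: dict[str, str], client_ip: str) -> dict[str, str]:
    """Headers to send to the backend after TLS has been terminated at the proxy."""
    headers = dict(client_headers)
    prior = headers.get("X-Forwarded-For")  # assumes canonical header capitalization
    # Append the client address to any existing chain of proxies.
    headers["X-Forwarded-For"] = f"{prior}, {client_ip}" if prior else client_ip
    # The backend sees plain HTTP, so record that the client actually used HTTPS.
    headers["X-Forwarded-Proto"] = "https"
    return headers

# Response headers that would reveal backend details (illustrative, not exhaustive).
REVEALING_HEADERS = {"server", "x-powered-by"}

def public_response_headers(backend_headers: dict[str, str]) -> dict[str, str]:
    """Drop headers that describe the backend before replying to the client."""
    return {k: v for k, v in backend_headers.items() if k.lower() not in REVEALING_HEADERS}

print(upstream_request_headers({"Host": "example.com"}, "203.0.113.7"))
# {'Host': 'example.com', 'X-Forwarded-For': '203.0.113.7', 'X-Forwarded-Proto': 'https'}
print(public_response_headers({"Server": "Puma", "Content-Type": "text/html"}))
# {'Content-Type': 'text/html'}
```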

Today, most features of a Reverse Proxy are also present in Load Balancers, blurring the line between the two. Examples of Reverse Proxies are Nginx, Apache, HAProxy, and Cloudflare.

Web Server

Web Servers act as an intermediary between the application and the Internet. Although a Web Server can face the Internet on its own, in practice a Load Balancer/Reverse Proxy is usually placed in front of it.

As its name implies, a Web Server handles HTTP traffic: it accepts HTTP(S) requests, responds via HTTP, and logs the request and response data. Web Servers also provide additional features, such as:

- Authentication

- Throttling and traffic control

- Large file support
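Throttling is often implemented with a token bucket: each client gets a bucket that refills at a steady rate, and a request is allowed only when a token is available. Nginx's own rate limiting is documented as using a leaky-bucket method, so treat this as a generic sketch of the idea rather than how any specific Web Server implements it. The rate and capacity values are arbitrary, and a real server would keep one bucket per client (for example, keyed by IP address).

```python
import time
from typing import Callable

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity   # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False             # a server would typically answer 429 Too Many Requests

fake_now = [0.0]
bucket = TokenBucket(rate=1, capacity=3, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])              # [True, True, True, False]
fake_now[0] = 2.0
print(bucket.allow(), bucket.allow(), bucket.allow())  # True True False
```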

Some popular examples of Web Servers are Nginx and Apache.
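Nginx and Apache are far more capable, but the basic cycle described above (accept an HTTP request, respond over HTTP, log the exchange) can be sketched with Python's standard library. Python's documentation states that `http.server` is not recommended for production; it is used here only to make the cycle concrete, and the handler name and response text are hypothetical.

```python
import logging
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")

class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = f"You requested {self.path}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_request(self, code="-", size="-"):
        # Access log line: client address, request line, and response status.
        logging.info('%s "%s" %s', self.client_address[0], self.requestline,
                     getattr(code, "value", code))

def serve(port: int = 8000) -> None:
    # Listens on all interfaces; in production it would sit behind a Reverse Proxy.
    ThreadingHTTPServer(("", port), HelloHandler).serve_forever()
```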

Application Server

Some applications, such as those written in Ruby, need an Application Server to communicate via HTTP. An Application Server is a more specialized form of Web Server that supports specific languages or frameworks, and it is normally used together with a Web Server.

For Ruby, some examples of Application Servers are WEBrick, Passenger, Unicorn, and Puma.

How it all fits together

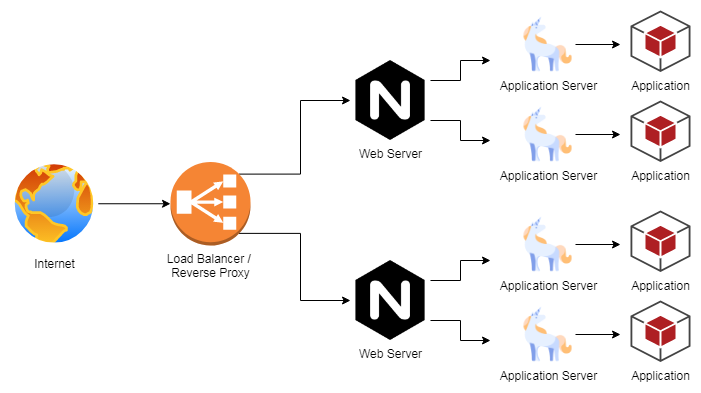

This simple diagram gives a clearer view of how all of these services are used in a standard web application:

A Load Balancer and/or a Reverse Proxy sits between the server(s) and the public Internet. Multiple servers can then be used behind the Load Balancer, each running a Web Server (e.g. Nginx) that receives and serves HTTP requests. Each Web Server passes traffic to multiple processes of the Application Server (e.g. Unicorn), which in turn connects your application (e.g. a Ruby app) to the Web Server.
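To make the flow concrete, here is a toy, in-process model of the chain in the diagram. Every class and server name is hypothetical and each layer is reduced to a function call; in a real deployment these are separate processes or machines communicating over the network.

```python
import itertools

def application(path: str) -> str:
    """The application itself (a Ruby app in this article's example)."""
    return f"page for {path}"

class AppServer:
    """Application Server with several worker processes, modeled as a rotation of worker IDs."""
    def __init__(self, name: str, workers: int):
        self.name = name
        self._workers = itertools.cycle(range(workers))

    def handle(self, path: str) -> str:
        worker = next(self._workers)
        return f"{application(path)} [{self.name} worker {worker}]"

class WebServer:
    """Web Server on each machine: logs the request, then hands it to the Application Server."""
    def __init__(self, app_server: AppServer):
        self.app_server = app_server
        self.access_log: list[str] = []

    def handle(self, path: str) -> str:
        self.access_log.append(f"GET {path}")
        return self.app_server.handle(path)

class LoadBalancer:
    """Entry point facing the Internet: spreads requests over the machines behind it."""
    def __init__(self, web_servers: list[WebServer]):
        self._next = itertools.cycle(web_servers)

    def handle(self, path: str) -> str:
        return next(self._next).handle(path)

lb = LoadBalancer([WebServer(AppServer("server-1", workers=2)),
                   WebServer(AppServer("server-2", workers=2))])
for path in ["/", "/about", "/cart"]:
    print(lb.handle(path))
# page for / [server-1 worker 0]
# page for /about [server-2 worker 0]
# page for /cart [server-1 worker 1]
```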
